- 29 Posts

- 21 Comments

8·3 months ago

If they spend weeks convincing you that you should do it, give you money or other resources to do it, and so on, then it can be entrapment.

Things like that should theoretically help you make an argument for entrapment, but it’s no sure thing

51·4 months ago

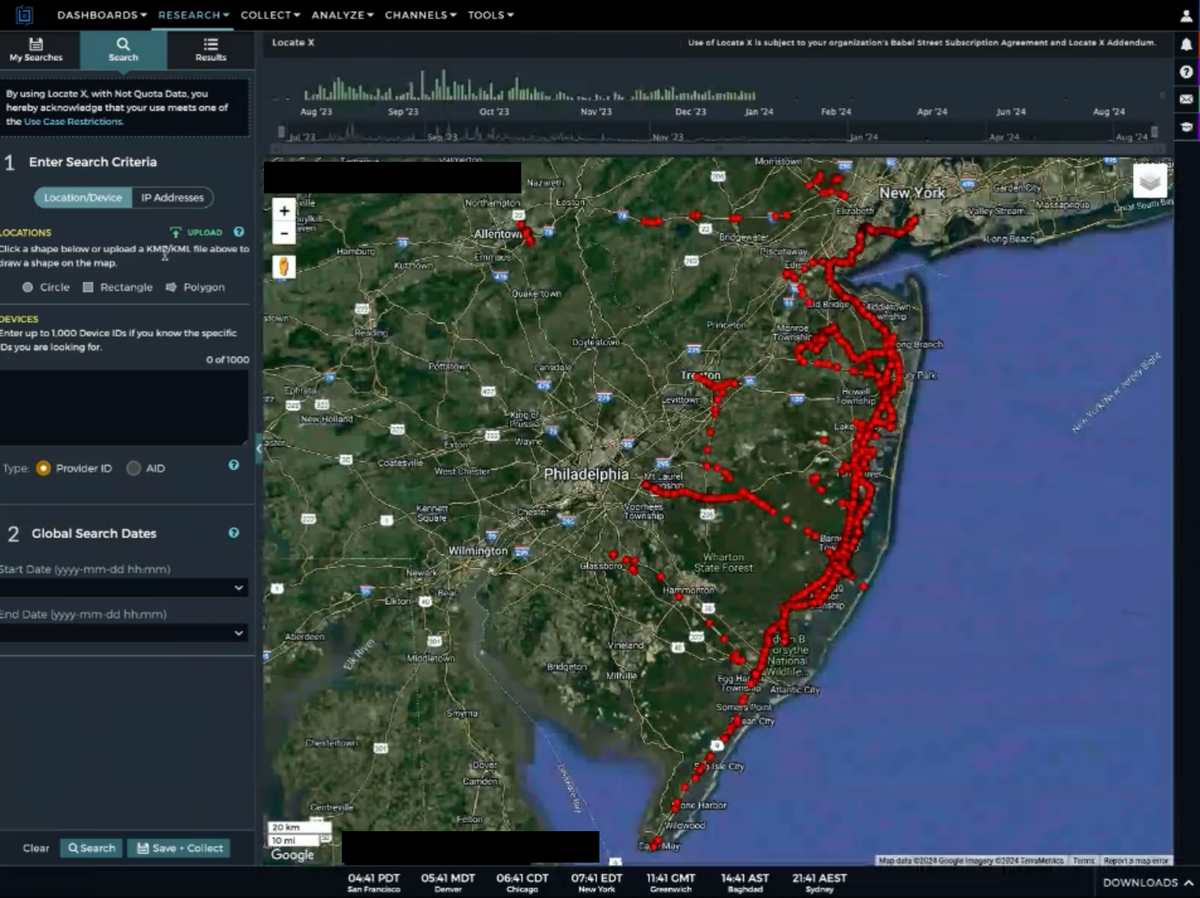

Good question I don’t have the answer to. I could speculate that this is all likely being sourced from some sort of marketing material that ShadowDragon put out where they just flatly say they’re gathering this information from Tesseract, and in reality they’re actually gathering any information they can on users who search for this software and download this software, but like I said I’m speculating.

If you’re really interested, I would say you should email the author of this article, reach out to Tesseract’s development team, or find a way to get a subpoena against ShadowDragon and/or ICE

6·4 months ago

Sorry to hear that, try this one

1·5 months ago

Related, “Wife of 72-year-old veteran beaten to death by a cellmate in Alabama jail sues corrections officers” (archived)

e; Actually, this needs a clarification. This news story is about a jail facility in Alabama, whereas this documentary is about a prison facility. Generally speaking, prisons are where people are sent after they are convicted of crimes, jails are where they are held before trial if they are unable to post bail. Both ultimately report to the same state department of corrections, but these are separate facilities.

21·8 months ago

Do you think the department of education writes the textbooks, standardized tests (SAT, ACT, etc.), grading and student management software, learning management systems (Google Classroom, Canvas), or manufactures its own classroom tech (Chromebooks, tablets)?

Each one of those has a bunch of particular nuances, but in general - yeah, I think they could and should in a lot of those cases

The education system is full of for-profit businesses that can jack up the prices, and they do.

Yeah, it’s a big problem with a lot of little parts to be tackled

The DOE simply doesn’t have the resources to create these things themselves

Then the government should give them the resources (actually, I think a whole separate agency that develops open source software for any government agency or anyone else who wants to use it should be established, but that’s kind of beside the point).

and would cost them far more if they tried

I don’t think that’s true, and even if it were, I think we should be willing to pay a premium to make sure essential systems that support the public good are being administered in democratic ways (e.g. by public agencies that are required to give public reports to elected lawmakers and be subject to citizens’ FOIA requests).

the business model has existed forever

A lot of stupid ideas hang on for a really long time. Like, we still have monarchies in the 21st century world.

Personally, I’m more concerned with the use of Google products in schools. A company whose sole business is harvesting user data and selling it to advertisers should have no place in schools or children’s products. But they’ve embedded themselves into everything so people just accept it at the cost of privacy

I 100% agree this is a significant problem too, I just haven’t come across any good articles about it recently

51·8 months ago

Exactly, they’re a captive audience, and moreover they are legally incompetent to consent to a contracted business relationship like this

If this was a department of education AI or even some kind of transparently administered non-profit organization I’d be fine with this, but the fact that this is being developed for some for-profit company that can just jack up their rates and cut off public schools whenever they want to is bullshit. Like, I’m not opposed to the technology of LLMs at all, I think they’re actually pretty neat, but our social and economic systems have a lot of exploitative trash in them that cool technologies can inadvertently exacerbate.

423·9 months ago

An American security contractor and a Chinese embassy employee are at a bar. The American says, “I gotta say, your propaganda is impressive. You sure know how to keep your people in line.”

“Oh, you’re too gracious,” the embassy worker says. “And besides, it’s nothing compared to American propaganda.”

The contractor chokes on his drink and gives his friend a bewildered look.

“What are you talking about? There’s no propaganda in America.”

There are more than three things

Not everything that is worth discussing has a source. Abstract ideas and hypothetical scenarios (among other things) have their places in rhetoric and communication.

A lot of people who think they’re saying “[actual fact]” are really just stating “[subjective opinion]” and call any criticism of their opinions “[incoherent rage]”

1·11 months ago

deleted by creator

92·11 months ago

Yeah, I’m not a Texan but I also disagree about this. Also, Austin has produced some amazing music over the years (for example, a random Austin band I’ve been in love with recently is Being Dead).

Gerrymandered Congress, no campaign finance laws, bought and paid for judiciary - Allow us to introduce ourselves

11·1 year ago

They’re very good at identifying talented developers /s

64·1 year ago

The devices should be returned to inmates immediately. Prison administrators should then slap themselves in the face one time for implementing them poorly to begin with, slap themselves in the face several times for overreacting to a viral story without having any reason to believe there was an active or imminent problem with any of their inmates, and deliver a tooth-loosening punch to their own faces for thinking they could punish these inmates by taking away their education to cover their screw-up.

After that, hire a real IT person who knows what they’re doing by paying them decently, allowing remote work, and not drug testing, and then listen to them.

135·1 year ago

Not victimizing all of the student inmates because the prison invested in a poorly designed system that could potentially be exploited when none of the students have attempted that exploit or were likely even aware of it

152·1 year ago

Every prisoner who knew about that password

Meanwhile, back in reality

Wright confirmed no one incarcerated in Washington prisons had attempted to unlock their devices but said the decision was “made out of an abundance of caution.”

196·1 year ago

They were taken for reasons that inmates had nothing to do with, they have not been replaced, and it’s unclear when they’ll be returned. Inmates who are enrolled in college courses are having to handwrite papers that are due soon.

3·1 year ago

Also, if something is technically possible but illegal for the CIA/FBI/etc to do, it just means they have to try to hide the fact they’re still doing it

Also, it’s arbitrary and capricious - this is hitting everyone in the prison, regardless of their sentence, just because they happen to be incarcerated at the wrong place and time